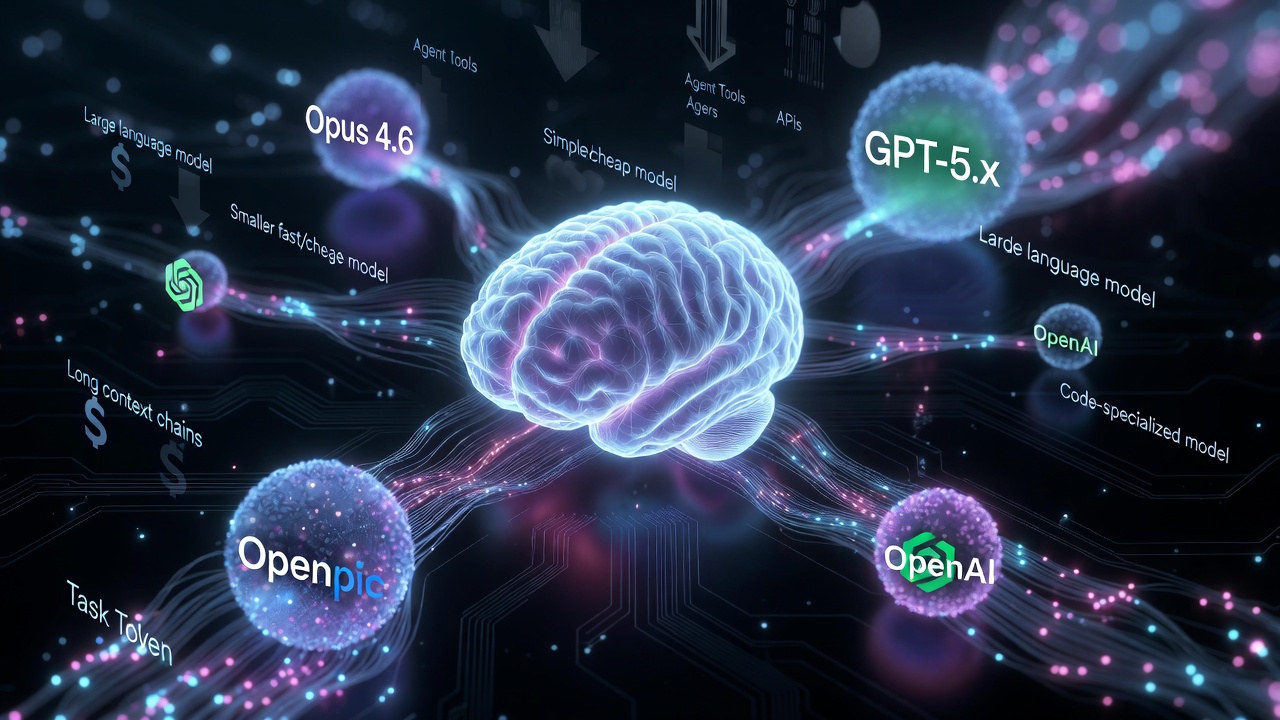

Claude Opus 4.6 launched on February 5th, 2026 — the same day OpenAI dropped GPT-5.3-Codex. The timing wasn’t subtle. But beyond the benchmark race, the release landed at a moment that reveals where agentic AI is actually heading, and why the next critical layer isn’t a better model — it’s a smarter way to choose between them.

The OpenClaw Effect

Opus 4.6 didn’t arrive in a vacuum. OpenClaw — Peter Steinberger’s open-source autonomous agent framework — went viral in late January, amassing over 124,000 GitHub stars and spawning its own community conference within weeks. Steinberger’s recommendation was unambiguous: run it on Anthropic’s Opus for long-context strength and prompt-injection resistance. The project proved massive demand for what its creator calls “AI that actually does things.”

It also proved something less comfortable: the economics are brutal. Each agent loop reloads tens of thousands of tokens of context. Heartbeats and cron jobs hit the model every thirty minutes. Users running OpenClaw on frontier models reported bills in the hundreds of dollars over a single weekend. Users who switched to cheaper models to control costs reported losing anywhere from 40% to 95% of the capability, by their own estimates. The model is the product. The framework is packaging.

Opus 4.6’s new features read like a direct response to these pain points. The one-million-token context window addresses context rot in long-running agent sessions. Adaptive thinking — four configurable effort levels replacing the old binary extended-thinking toggle — means routine agent heartbeats don’t need to burn Opus-tier reasoning tokens. Context compaction summarizes older conversation tokens to keep agents running longer without hitting limits. And agent teams allow parallel coordination across tasks, reducing the sequential bottleneck that made complex workflows slow and expensive.

Whether Anthropic timed the release around OpenClaw’s virality or simply benefited from it, the result is the same: the model that the most popular open-source agent framework already recommends just got substantially better at exactly what that framework demands.

The Consumer Innovation Gap

There’s a deeper dynamic at play beneath the hype cycle. Consumer hardware is being squeezed out of the capable-local-model game. DDR5 prices are climbing. Nvidia has little incentive to put serious VRAM on consumer GPUs when datacenter parts like the H100 and B200 carry far higher margins. Running a genuinely capable local model (70B+ parameters, even quantized) is drifting out of hobbyist range.
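A rough weights-only calculation shows why 70B is the tipping point. The sketch below assumes memory ≈ parameter count × bits per weight ÷ 8 and deliberately ignores the KV cache, activations, and runtime overhead, all of which push the real requirement higher. The 24 GB reference is the VRAM of a high-end consumer card such as the RTX 4090, used only as a yardstick.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes.

    This is a lower bound: KV cache, activations, and framework
    overhead are not included.
    """
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


CONSUMER_VRAM_GB = 24  # yardstick: one high-end consumer GPU

for bits in (16, 8, 4):
    need = weight_memory_gb(70, bits)
    verdict = "fits" if need <= CONSUMER_VRAM_GB else "does not fit"
    print(f"70B @ {bits}-bit: ~{need:.0f} GB of weights ({verdict} in {CONSUMER_VRAM_GB} GB)")
```

Under these assumptions even aggressive 4-bit quantization leaves about 35 GB of weights, more than a single 24 GB card holds before any context is loaded.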

This creates a forced choice for the individual power user: accept the capability ceiling of what runs on affordable local hardware, or route through cloud APIs to frontier models — accepting their security model, their rate limits, their terms of service, and their cost structure along with their capabilities.

Enterprise buyers face their own constraints, but different ones. Compliance regimes, procurement cycles, SOC 2 requirements, and institutional risk aversion mean they can’t deploy autonomous agents with broad system permissions even when the technology supports it. Every tool needs audit trails, sandboxing, and legal review.

The individual power user operates under a fundamentally different threat model. They own the data. They own the infrastructure. When they grant an agent elevated permissions on their own hardware, they’re making an informed decision about their own attack surface with bounded consequences. This asymmetry creates a real innovation gap. The capabilities that make enterprise security teams lose sleep — broad filesystem access, autonomous code execution, persistent background agents, cross-service API integration without human-in-the-loop approval — are exactly what makes agentic workflows transformative. Enterprise will get there eventually, through slow, sandboxed, compliance-gated rollouts. Consumer power users who accept the security tradeoffs can experiment now.

This is the same pattern that has played out repeatedly in tech history. Linux spent years as a hobbyist project before it hardened into enterprise-grade distributions like RHEL. Docker was “don’t run this in production” before Kubernetes made containers deployable at scale. The consumer and hobbyist tier absorbs the risk, does the exploratory innovation, and enterprise harvests the proven patterns later.

The Case for Intelligent Routing

This is where the threads converge. If the hardware squeeze pushes power users toward cloud APIs, and cloud APIs offer a growing menu of models at different capability and price points, the next critical innovation isn’t a better model — it’s a smarter orchestration layer that chooses between them.

Today, providers like OpenRouter function essentially as load balancers with a menu: you pick the model, and it proxies the request. But what agentic workflows actually need is intent-aware routing, a layer that makes model selection an intelligent decision on every call:

- Route simple classification and extraction to a cheap, fast model.

- Route complex multi-step reasoning to a frontier model like Opus or GPT-5.2.

- Route code generation to whatever currently leads the coding benchmarks.

- Route security-sensitive operations to models with stronger prompt-injection resistance.

- Factor in cost, latency, context window requirements, and capability thresholds per request.
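Taken together, those bullets describe a routing policy. Below is a minimal rule-based sketch of one, assuming a hypothetical three-tier catalog; the tier names, prices, latencies, capability scores, and thresholds are placeholders, not real model identifiers or pricing. A production router would more likely learn these decisions than hard-code them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Model:
    name: str                  # hypothetical tier label, not a real model ID
    cost_per_mtok: float       # hypothetical blended USD price per million tokens
    context_window: int        # maximum context, in tokens
    capability: int            # assumed coarse capability score, 1-10
    injection_resistant: bool  # assumed flag, e.g. from internal red-team evals
    p50_latency_s: float       # assumed median response latency, seconds


# Hypothetical catalog; real numbers would come from provider docs and evals.
CATALOG = [
    Model("cheap-fast", 0.5, 128_000, 4, False, 0.4),
    Model("code-leader", 3.0, 200_000, 8, False, 1.5),
    Model("frontier", 15.0, 1_000_000, 10, True, 4.0),
]

# Minimum capability each task type needs (assumed thresholds).
MIN_CAPABILITY = {"classify": 3, "extract": 3, "code": 8, "reason": 10}


@dataclass
class Request:
    task: str                              # e.g. "classify", "extract", "code", "reason"
    context_tokens: int
    security_sensitive: bool = False
    max_latency_s: Optional[float] = None  # None means no latency budget


def route(req: Request) -> Model:
    """Return the cheapest catalog model that meets every constraint of the request."""
    needed = MIN_CAPABILITY.get(req.task, 10)  # unknown task types need the top tier
    candidates = [
        m for m in CATALOG
        if m.capability >= needed
        and m.context_window >= req.context_tokens
        and (m.injection_resistant or not req.security_sensitive)
        and (req.max_latency_s is None or m.p50_latency_s <= req.max_latency_s)
    ]
    if not candidates:
        raise ValueError(f"no model satisfies request: {req}")
    return min(candidates, key=lambda m: m.cost_per_mtok)


print(route(Request("classify", 2_000)).name)
print(route(Request("code", 50_000)).name)
print(route(Request("extract", 2_000, security_sensitive=True)).name)
```

With this catalog, a short classification lands on `cheap-fast`, a code task on `code-leader`, and security-sensitive or very long-context work on `frontier`. Choosing the cheapest qualifying model, rather than the most capable one, is what turns the policy into a cost control.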

This is essentially Opus 4.6’s adaptive thinking concept lifted up a layer. Instead of one model deciding how hard to think, a routing layer decides which model should think at all. For agentic workflows — where a single task might involve dozens of LLM calls with wildly different complexity profiles — the impact on cost and performance could be transformative. The difference between an agent that burns $500 in a weekend and one that runs sustainably is largely about not invoking frontier-tier reasoning for routine “nothing has changed” heartbeat checks.
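Back-of-envelope arithmetic makes the heartbeat point concrete. The sketch assumes the thirty-minute heartbeat mentioned earlier, a 30,000-token context reloaded on every check, and two hypothetical input prices per million tokens; the prices are illustrative placeholders rather than any provider’s actual rates, and output tokens are ignored.

```python
HEARTBEATS_PER_DAY = 24 * 60 // 30  # one check every 30 minutes -> 48 per day
CONTEXT_TOKENS = 30_000             # assumed context reloaded on each check

# Hypothetical input prices in USD per million tokens (placeholders only).
PRICE_FRONTIER = 15.00
PRICE_CHEAP = 0.50


def daily_cost(price_per_mtok: float,
               calls: int = HEARTBEATS_PER_DAY,
               tokens: int = CONTEXT_TOKENS) -> float:
    """Input-token cost of one day of heartbeats; output tokens are ignored."""
    return calls * tokens / 1_000_000 * price_per_mtok


frontier = daily_cost(PRICE_FRONTIER)
cheap = daily_cost(PRICE_CHEAP)
print(f"frontier tier: ${frontier:.2f}/day, cheap tier: ${cheap:.2f}/day")
```

Under these assumptions a single agent’s heartbeats cost about $21.60 a day on the frontier tier versus about $0.72 on the cheap tier, before the agent does any real work. Multiply by several agents and cron jobs and the gap compounds quickly.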

Some of this is emerging in pieces. OpenRouter offers basic cost and speed sorting. Companies like Martian and Not Diamond are working on model routing. Claude’s own adaptive thinking and effort levels are the single-vendor version of the same idea. But nobody has nailed the full loop yet — especially not for agentic workflows.

The Barriers

Several hard problems stand between here and truly intelligent routing:

Routing latency. Adding a classification step before every LLM call introduces overhead. The router needs to be fast enough that it doesn’t negate the cost savings it creates — a non-trivial constraint when agentic loops are already latency-sensitive.

Context continuity. If you start a reasoning chain in Opus and need to continue it in a cheaper model, the handoff isn’t clean. Different models have different internal representations, system prompt sensitivities, tool-calling formats, and failure modes. Seamless model-switching mid-task remains an unsolved problem.
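Tool-calling formats are one concrete piece of that handoff problem. Anthropic’s Messages API returns tool calls as `tool_use` content blocks whose `input` is already a JSON object, while OpenAI’s Chat Completions API returns `tool_calls` entries whose `function.arguments` is a JSON-encoded string. The sketch below normalizes both into one neutral structure; the payloads are simplified dicts rather than full API responses, and the `ToolCall` type is a hypothetical internal format. It only bridges the wire format, not differences in how the models behave.

```python
import json
from dataclasses import dataclass
from typing import Any


@dataclass
class ToolCall:
    """Provider-neutral tool call (hypothetical internal format)."""
    call_id: str
    name: str
    arguments: dict[str, Any]


def from_anthropic(content_blocks: list[dict]) -> list[ToolCall]:
    """Extract tool calls from Anthropic-style message content blocks."""
    return [
        ToolCall(b["id"], b["name"], b["input"])  # "input" is already a dict
        for b in content_blocks
        if b.get("type") == "tool_use"             # skip text and other blocks
    ]


def from_openai(message: dict) -> list[ToolCall]:
    """Extract tool calls from an OpenAI Chat Completions-style assistant message."""
    calls = []
    for tc in message.get("tool_calls") or []:  # field may be absent or None
        fn = tc["function"]
        # "arguments" is a JSON string; json.loads raises if the model emitted invalid JSON
        calls.append(ToolCall(tc["id"], fn["name"], json.loads(fn["arguments"])))
    return calls
```

Once both sides speak `ToolCall`, the orchestration layer can replay a tool history to whichever model the router picks next, though prompt sensitivities and failure modes still differ.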

The bootstrapping problem. You need a model to decide which model to use, which introduces its own cost and latency. The routing decision itself must be cheap and fast enough to justify its existence — likely requiring a small, purpose-built classifier rather than a general-purpose LLM.
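The break-even condition can be written down directly. Let $C_f$ and $C_c$ be the per-call cost of the frontier and cheap models, $C_r$ the cost of the routing decision itself, and $p$ the fraction of calls the router sends to the cheap model. Assuming the router runs before every call and never misroutes (both simplifications), routing pays off exactly when:

```latex
\begin{aligned}
\mathbb{E}[C_{\text{routed}}] &= C_r + p\,C_c + (1-p)\,C_f \\
\mathbb{E}[C_{\text{routed}}] < C_f &\iff C_r < p\,(C_f - C_c)
\end{aligned}
```

The same inequality holds with latencies in place of costs. For example, if 80% of calls could go to a model costing one-tenth as much, the router can spend up to $0.8 \times 0.9 = 0.72$ of a frontier call’s cost and still break even. That margin shrinks quickly as $p$ falls or as the cheap model’s price approaches the frontier’s, and misroutes, ignored here, eat into it further.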

Capability assessment is dynamic. Model rankings shift with every release. Today’s best code generator might not be tomorrow’s. The routing layer needs to stay current with benchmark data and account for the gap between benchmarks and real-world performance, which, as any practitioner knows, can be substantial.
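One way to keep rankings current is to treat benchmark scores as priors and update them from observed outcomes. The sketch below keeps an exponentially weighted moving average of task success per (model, task type) pair; the model names, prior values, and smoothing factor `alpha` are hypothetical, and “success” is assumed to come from some external check such as tests passing.

```python
from collections import defaultdict


class CapabilityTracker:
    """Per-(model, task) success estimates seeded from benchmark priors."""

    def __init__(self, priors: dict[tuple[str, str], float], alpha: float = 0.1):
        self.alpha = alpha                      # weight given to each new observation
        self.scores = defaultdict(lambda: 0.5)  # neutral estimate for unseen pairs
        self.scores.update(priors)

    def record(self, model: str, task: str, success: bool) -> None:
        """Blend one observed outcome into the running estimate."""
        key = (model, task)
        outcome = 1.0 if success else 0.0
        self.scores[key] = (1 - self.alpha) * self.scores[key] + self.alpha * outcome

    def best(self, task: str, models: list[str]) -> str:
        """Return the model with the highest current estimate for this task."""
        return max(models, key=lambda m: self.scores[(m, task)])


tracker = CapabilityTracker({("model-a", "code"): 0.8, ("model-b", "code"): 0.7})
print(tracker.best("code", ["model-a", "model-b"]))  # benchmark leader wins at first
for _ in range(3):
    tracker.record("model-a", "code", success=False)  # but keeps failing real tasks
print(tracker.best("code", ["model-a", "model-b"]))
```

With these numbers, the benchmark leader loses its place after a few real-world failures, which is one simple way to let observed performance override stale leaderboards.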

Provider reliability and rate limits. Intelligent routing across multiple providers means managing multiple API keys, different rate limit policies, varying uptime guarantees, and inconsistent error handling. The orchestration complexity compounds quickly.
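A common pattern for the reliability side is an ordered fallback chain with exponential backoff on rate limits. In the sketch below, providers are plain callables and `RateLimited` is a hypothetical exception standing in for each SDK’s own rate-limit error (real SDKs raise their own types, typically on an HTTP 429). The `sleep` parameter is injectable so the logic can be tested without waiting.

```python
import time
from typing import Callable, Sequence


class RateLimited(Exception):
    """Hypothetical stand-in for a provider SDK's rate-limit (HTTP 429) error."""


def call_with_fallback(
    providers: Sequence[tuple[str, Callable[[str], str]]],
    prompt: str,
    retries: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[str, str]:
    """Try each provider in order and return (provider_name, response).

    Rate-limited calls are retried with exponentially growing delays;
    any other error moves straight on to the next provider.
    """
    errors: list[str] = []
    for name, call in providers:
        for attempt in range(retries):
            try:
                return name, call(prompt)
            except RateLimited:
                if attempt < retries - 1:  # no pointless wait after the final attempt
                    sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ... with defaults
            except Exception as exc:  # outage, malformed response, etc.
                errors.append(f"{name}: {exc!r}")
                break
        else:  # every attempt was rate limited
            errors.append(f"{name}: still rate limited after {retries} attempts")
    raise RuntimeError("all providers failed: " + "; ".join(errors))
```

A real orchestration layer would also track per-provider quotas and health, but even this ordered chain shows how quickly the error-handling surface grows once more than one provider is involved.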

Where This Goes

The future of agentic AI probably isn’t “pick one model provider and go all-in.” It’s a heterogeneous model ecosystem where the intelligence lives in the orchestration layer. The people building and refining that layer will be the power users and independent developers who understand the tradeoffs well enough to tune it — not enterprise procurement teams looking for a single vendor to call.

Whether intelligent routing emerges from providers like OpenRouter evolving their platform, from agent frameworks like OpenClaw building it into their architecture, or from a standalone project purpose-built for the problem, the convergence pressure is real. The models are commoditizing faster than anyone expected. The lasting value will accrue to whoever solves the routing problem.